LHC Computing Grid

Experiment Software Installation in LCG-2

Manuals series

| Document identifier: | Not applicable |
|---|---|
| Date: | 22 July 2005 |
| Author: | Roberto Santinelli, Simone Campana |
| Document status: | PUBLIC |
| Version: | 1.0 |
| EDMS id: | None |
| Section: | LCG Experiment Integration and Support |

| Issue | Item | Reason for Change |
|---|---|---|
| 22/07/05 | v1.0 | First version |

Reference [R1] collects the official requirements, from both the experiments and the site administrators, for the procedure to install software on LCG-2 nodes. The same document describes a possible approach to the problem, which is the starting point of the mechanism described here.

The Experiment Software Manager (ESM) is the member of the experiment VO entitled to install Application Software at the different sites. The ESM can manage (install, validate, remove...) Experiment Software on a site at any time through a normal Grid job, without prior communication with the site administrators. Such a job has, in general, no scheduling priority and is treated like any other job of the same VO in the same queue. The operation is therefore delayed if the queue is busy.

The site provides a dedicated space where each supported VO can install or remove software. The amount of available space must be negotiated between the VO and the site.

An environment variable holds the path to this space. Its format is the following:

VO_<name_of_VO>_SW_DIR
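As a minimal sketch, a script can derive the variable name from the VO name and check that the site defines it. The helper names `vo_sw_dir_var` and `vo_sw_dir` are hypothetical. The upper-casing follows the `VO_DTEAM_SW_DIR` form that the sample installation script in this document uses for the dteam VO; mapping any other character to an underscore is an assumption.

```shell
#!/bin/bash
# Hypothetical helper: build the variable name VO_<name_of_VO>_SW_DIR.
# Upper-casing matches VO_DTEAM_SW_DIR as used for the dteam VO; mapping any
# other character to "_" is an assumption, not documented behaviour.
vo_sw_dir_var() {
    local vo
    vo=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]' | tr -c 'A-Z0-9' '_')
    printf 'VO_%s_SW_DIR\n' "$vo"
}

# Hypothetical helper: print the software area of a VO, or fail if the
# variable is not defined on this node.
vo_sw_dir() {
    local var
    var=$(vo_sw_dir_var "$1")
    if [ -z "${!var}" ]; then
        echo "$var is not set on this node" >&2
        return 1
    fi
    printf '%s\n' "${!var}"
}
```

An installation script could then start with `cd "$(vo_sw_dir dteam)" || exit 1` instead of hard-coding the variable name.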

Different site configurations yield different scenarios for software management:

Once the software is installed, the ESM can perform the validation.

Validation is a process, or a series of processes and procedures, that verifies the installation of the software. It can be performed in the same job, right after installation (validation on the fly), or later on, via a dedicated job.

If the ESM judges that the software is correctly installed, the ESM publishes in the Information System (IS) the tag that unequivocally identifies the software. The tag is added to the GlueHostApplicationSoftwareRunTimeEnvironment attribute of the IS, using the GRIS running on the CE. Jobs requesting a particular piece of software can then be directed to an appropriate CE simply by setting a requirement in the JDL.
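The sketch below shows one way a user job could express such a requirement. It assumes the usual JDL `Member(...)` test against the published attribute; the function name `jdl_requiring_tag` and the placeholder executable are hypothetical and only serve the illustration.

```shell
#!/bin/bash
# Hypothetical helper: print a JDL whose Requirements select CEs that publish
# the given software tag. The executable is a placeholder for the real job.
jdl_requiring_tag() {
    local tag=$1
    cat <<EOF
Executable = "/bin/hostname";
StdOutput = "stdout";
StdError = "stderr";
OutputSandbox = {"stdout", "stderr"};
Requirements = Member("$tag", other.GlueHostApplicationSoftwareRunTimeEnvironment);
EOF
}

# Example: a job that must land on a CE advertising lcg_utils-4.5 for dteam.
jdl_requiring_tag "VO-dteam-lcg_utils-4.5"
```

Redirecting the output to a file gives a JDL that can be submitted like any other job.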

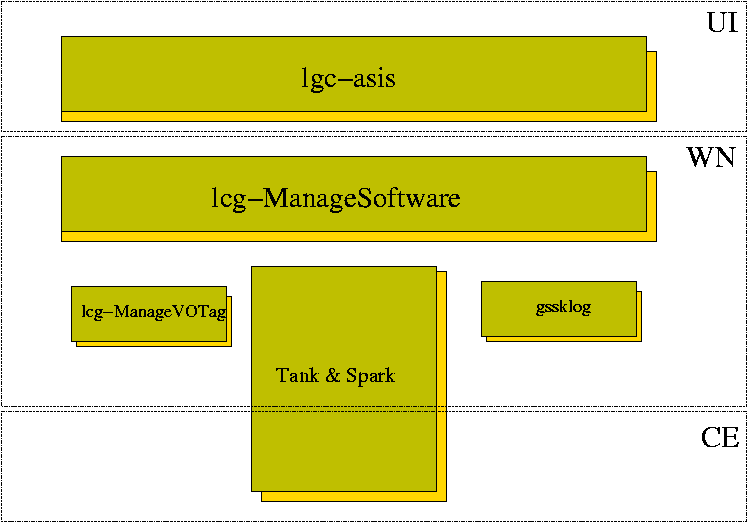

LCG has implemented the scheme described above as three layers of software, summarized in Figure 1:

The top layer, lcg-asis, is the user-friendly interface that the ESM uses to install, validate or remove software on one or more sites that support the VO the ESM belongs to.

The middle layer, lcg-ManageSoftware, is installed on each WN and is the toolkit that steers any Application Software management process.

Finally, the low-level layer consists of lcg-ManageVOTag, which is used to publish tags in the Information System; Tank&Spark, which is used to propagate software when the site does not provide shared disk space; and the gssklog client, which is used to convert GSI credentials into AFS Krb5 tokens.

The ESM must prepare one or more tarballs containing the software to be installed, together with scripts to install, validate and remove the software. No constraints are imposed on the names of these scripts and tarballs. Afterwards, the ESM invokes the lcg-asis script (from the UI), providing the names of the tarballs and/or of the scripts to be used for installing, removing or validating the software. If no tarballs are specified, the scripts will install the software directly from some central repository.

The lcg-asis script performs basically the following actions:

These special jobs, submitted either with lcg-asis or manually, invoke on the target WN the script lcg-ManageSoftware, which performs the following actions:

The progress of each Experiment Software management process is updated and/or monitored by each of the software layers provided by LCG for Experiment Software Installation. It relies on a scheme of flavours for the tag that is published in the Information System for any given version of Experiment Software to be installed, removed or validated. The format of the tag published at every change of status of the ongoing process is:

VO-<name_of_VO>-<flag-provided-by-ESM>-<status-flavour>

The flavour is a string that informs the ESM about the status of a given Experiment Software management process. It can be one of these possible values:

There are still two other possible states:

This tag publication mechanism prevents concurrent processes from running simultaneously for the same Application Software.
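The tag format given above can be composed and inspected with a few lines of shell. This is only a sketch: `make_tag` and `tag_flavour` are hypothetical names, and the flavours recognised are limited to those that appear in the sample lcg-asis output later in this document, which is not claimed to be the complete list.

```shell
#!/bin/bash
# Compose VO-<name_of_VO>-<flag-provided-by-ESM>[-<status-flavour>].
# An empty flavour yields the plain tag of installed, validated software.
make_tag() {
    local vo=$1 flag=$2 flavour=$3
    printf 'VO-%s-%s%s\n' "$vo" "$flag" "${flavour:+-$flavour}"
}

# Print the flavour of a published tag, or nothing if it carries none.
# Only the flavours visible in this document's sample output are recognised.
tag_flavour() {
    local tag=$1 f
    for f in to-be-validated aborted-install aborted-remove processing-remove; do
        case $tag in
            *-"$f") printf '%s\n' "$f"; return 0 ;;
        esac
    done
    return 0
}
```

For example, `make_tag dteam lcg_utils-4.5 to-be-validated` prints `VO-dteam-lcg_utils-4.5-to-be-validated`, and `tag_flavour` applied to that string prints `to-be-validated`.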

The tool offers several features that help the ESM in his activity. It allows the VO Software Manager to gather information about the software installed at all sites supporting the VO (Querying operational mode). In addition, it can automatically build the JDL files used for managing experiment software on a site, store them in a directory (Managing operational mode) and even submit the corresponding jobs. It is the duty of the Software Manager to verify that they ended correctly and to collect the output.

The former operational mode is invoked (from a UI) with the command lcg-asis --status. This mode requires a set of general-options and some specific status-options.

lcg-asis runs in Managing operational mode with the options --install, --validate or --remove, together with some specific manage-options and the general options. The --install and --validate options can be used together to install software and validate it on the fly.

general-options

The --filter option requires a more detailed explanation. Its syntax is inherited from the lcg-info command. The attributes that can be used in a filter are listed by issuing the command:

$ lcg-info --list-attrs

An example of a filter that looks for CEs that have at least one free CPU and no more than one waiting job follows:

--filter "FreeCPUs>=1,WaitingJobs<=1"

The comma is interpreted as an AND. The expression must not contain blank spaces. Possible operators are: =, >=, <=
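As an illustration of these rules, the following sketch assembles a filter expression from individual conditions and rejects any condition that contains a blank or lacks one of the three operators. The function name `build_filter` is hypothetical and is not part of lcg-asis.

```shell
#!/bin/bash
# Hypothetical helper: join conditions with commas (AND) into one filter
# expression, rejecting blanks and conditions without =, >= or <=.
build_filter() {
    local cond out=""
    for cond in "$@"; do
        case $cond in
            *[[:space:]]*) echo "blank in condition: '$cond'" >&2; return 1 ;;
            ?*=?*) ;;   # an "=" with text on both sides covers =, >= and <=
            *) echo "no operator in condition: '$cond'" >&2; return 1 ;;
        esac
        out="${out:+$out,}$cond"
    done
    printf '%s\n' "$out"
}

# Prints the expression used in the example above.
build_filter "FreeCPUs>=1" "WaitingJobs<=1"
```

The result can be passed on the command line as `--filter "$(build_filter ...)"`.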

status-options (used with the -status options)

manage-options (used with the -install, -validate and -remove options)

The command lcg-ManageSoftware must be invoked with several arguments, which are passed in the Arguments field of the JDL.

First, the action to perform must be specified. This will take one of the following values:

Several other pieces of information must also be provided.

The lcg-ManageVOTag command can be used to manage the contents of the GlueHostApplicationSoftwareRunTimeEnvironment attribute of the IS, for a particular CE.

Its different options can be used to add (-add option) a new tag for installed software, show the list of published tags for a given VO (-list option), remove (-remove option) a defined tag, or clean (-clean option) everything that had been previously published.

This tool can be handy in several situations, such as when an error in the published information has to be corrected, or when the tag for some software needs to be added manually. Notice that this is not necessary if the lcg-ManageSoftware command was used, since that tool automatically publishes the tag for installed and validated software. However, if the ESM chooses another method for the installation (e.g. asking the site administrator to install the software manually), then he must make sure that the software is correctly published in the IS, and for that he can use the lcg-ManageVOTag command.

The following arguments have to be passed:

The tag indicating that software is available on a given site has the following structure:

VO-dteam-lcg_utils-4.5

For the sake of completeness, the Tank & Spark mechanism is briefly described in this section. More detailed information about its design and functionality, and about how to install and configure it, is available in [R2] and [R3].

Tank & Spark is a mechanism based on P2P technology that synchronizes the software among the WNs of sites that do not provide dedicated space through a shared area.

Spark is the client, called by lcg-ManageSoftware and run on a WN. It contacts a server (Tank) listening on the CE in order to register, in the server database, the information about a new tag to be propagated (or removed). It also receives from Tank the coordinates of the repository of the Experiment Software (usually located on an SE). Spark then synchronizes the local directory with the mirrored one in the repository, using rsync.

The Tank service, in turn, receives periodic requests for the latest version of the software from every WN of the site and for every VO. These are triggered by cron jobs installed on the WNs. After the registration made by Spark, these requests trigger the synchronization from the central repository to the local areas of all the WNs, so that the software ends up installed on all of them.

Any application that needs to integrate with this service can invoke the client with the following syntax:

<LCG_LOCATION>/sbin/lcg-spark -h https://<HOST_CE>:18085 -a <action> -f <TAG> \
--vo <voname> -n <email_address>

where:

This section describes a simple sample script for installing new software on LCG-2. Guidelines on how to build the necessary scripts (from the experiment side) are also provided. A complete list of examples that use the current implementation of the Experiment Software Installation mechanism can be found in [R3].

How should the experiment's script be written? There are only a few recommendations to take into account:

NOTE: To keep the process fully transparent to the end user, $VO_<name_of_VO>_SW_DIR is the root directory under which software is installed, and the script should use it as such.

At the end of this preparation, in this temporary directory there are one (or more) tarballs (packaged or unpackaged) and the script for installing (or validating or removing) software.

An example of such a script (install_lcg) follows:

#!/bin/bash

export TAR_LOC=`pwd` # this is the temporary directory that is

# supposed to hold the steering script and the tarballs

wget -O "$TAR_LOC/lcg-util-client.tar.gz" http://grid-deployment.web.cern.ch/grid-deployment/eis/docs/lcg_util-client.tar.gz

# This tarball does not come from the grid: it is fetched from the web (which

# requires OUTBOUND connectivity) and saved under the name that tar expects below

cd $VO_DTEAM_SW_DIR #software installation root directory

mkdir lcg_utils-4.5

cd lcg_utils-4.5

echo "running the command : tar xzvf $TAR_LOC/lcg-util-client.tar.gz"

tar xzvf $TAR_LOC/lcg-util-client.tar.gz

rc=$? # keep the tar exit code; testing $? directly would overwrite it

if [ "$rc" -ne 0 ]; then # failure?

exit "$rc"

fi

echo "running the command : tar xzvf $TAR_LOC/lcg-util-client-install.tar.gz"

# lcg-util-client-install.tar.gz is another tarball that has been uploaded on

# the grid and that the framework for installing software has automatically

# replicated on the $TAR_LOC directory of the WN.

tar xzvf $TAR_LOC/lcg-util-client-install.tar.gz

exit $? # This is the relevant return code

Executable = "/opt/lcg/bin/lcg-ManageSoftware";

InputSandbox = {"install_lcg"};

OutputSandbox = {"stdout", "stderror"};

stdoutput = "stdout";

stderror = "stderror";

Arguments = "--install --install_script install_lcg --vo dteam --tag lcg_utils-4.5 --tar lcg-util-client-install.tar.gz --notify support-eis@cern.ch";

Requirements = other.GlueCEUniqueID == "lxb0706.cern.ch:2119/jobmanager-pbs-long";

This JDL says that lcg-ManageSoftware is called on a WN of the site lxb0706.cern.ch to install new software (--install action) within the VO dteam. The new software is identified by the tag lcg_utils-4.5. The --install_script option shows that the experiment script driving the installation is install_lcg. At the end of the process, if Tank & Spark is running on the site, a report of the process is sent to the support-eis@cern.ch mail address.

The use of lcg-asis hides the difficulties that using lcg-ManageSoftware directly could imply. The steps summarized in the previous section are fulfilled in one go with the command:

lcg-asis --install --tag lcg_utils-4.5 --vo dteam --install-script install_lcg \
--tar lcg-util-client-install.tar.gz

The command displays a template of the JDL and lets the ESM decide whether to proceed, modify some field or abandon the operation.

Creating JDL files for job installation....

-------------------------------------------------------------------------------

Executable="/bin/bash";

OutputSandbox={"stdout","stderr"};

InputSandbox={"/afs/cern.ch/user/s/santinel/scratch0/grid/SInstallation/lcg-utils/install_lcg"};

stderror="stderr";

stdoutput="stdout";

Arguments="$LCG_LOCATION/bin/lcg-ManageSoftware --vo dteam --tag lcg_utils-4.5 --notify roberto.santinelli@cern.ch --install --install_script install_lcg --tar lcg-util-client-install.tar.gz";

Requirements = other.GlueCEUniqueID == "<CENAME>";

-------------------------------------------------------------------------------

(P)roceed with JDL creation,(M)odify attr. value, (E)xit program: P

If the ESM agrees with this JDL, the tool loops over the selected sites that comply with the specified requirements (-os, --time and --filter options) and, for each site, creates the JDL (<CENAME>.jdl) in the specified subdirectory. If another tag (in whatever flavour) is already found on the site, the tool does not create the JDL for that site.

JDL files will be created under the directory:

/afs/cern.ch/user/s/santinel/scratch0/grid/SInstallation/lcg-utils/lcg-asis_install_20-04-05_13:59:00_dteam_lcg_utils-4.5

**************************************************************************************

Cluster OS OSRel. Deduced OS

lxb0706.cern.ch Redhat 7.3 RH7

Selected Queue: lxb0706.cern.ch:2119/jobmanager-pbs-infinite

Matching TAG(s): NO-TAG

OK: preparing JDL....

**************************************************************************************

Once these JDLs have been created, the ESM is prompted to decide whether to submit the jobs now or leave that for later (with edg-job-submit or the submitter_general tool, see [R3]).

**************************************************************************************

The JDL files have been created under the directory

/afs/cern.ch/user/s/santinel/scratch0/grid/SInstallation/lcg-utils/lcg-asis_install_20-04-05_16:30:15_dteam_lcg_utils-4.5

Do you wish to submit the jobs? [Y/n]Y

**************************************************************************************

If the ESM lets the tool submit the jobs (Y), the jobs are submitted and all Job-IDs are stored in a file called submitted-job-id.list under the basedir/dirname directory.

Tip: Instead of installing software on all sites, the ESM might want to install it only on some sites whose cluster names he knows. In this case the options to pass are:

lcg-asis --vo dteam --tag lcg_utils-4.5 --status --verbose \
--filter "Cluster=lxb0706.cern.ch" --time 150

In this example, the ESM will install only on the site lxb0706.cern.ch, and the job will be submitted to the queue lxb0706.cern.ch:2119/jobmanager-pbs-long, whose MaximumWallClockTime is the shortest one that is still greater than the time requested with --time (prioritizing issue).

**************************************************************************************

Cluster OS OSRel. Ded.OS Q selected? Tag status

lxb0706.cern.ch Redhat 7.3 RH7 YES NO-TAG

Queue MaxWCT

lxb0706.cern.ch:2119/jobmanager-pbs-short 5

lxb0706.cern.ch:2119/jobmanager-pbs-infinite 2880

lxb0706.cern.ch:2119/jobmanager-pbs-long (Selected) 180

In the last output, the status for the tag lcg_utils-4.5 is NO-TAG, indicating that the tag is not published in any flavour on the selected CE.
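The queue choice described above (the shortest MaxWCT that is still greater than the requested time) can be sketched as follows. This is not the lcg-asis implementation: `select_queue` is a hypothetical name, and the input format of one "queue MaxWCT" pair per line is an assumption modelled on the listing above.

```shell
#!/bin/bash
# Hypothetical helper: read "queue MaxWCT" pairs on stdin and print the queue
# with the smallest MaxWCT that is still greater than the requested minutes.
# Returns a non-zero status when no queue is long enough.
select_queue() {
    local wanted=$1
    awk -v t="$wanted" '
        ($2 + 0) > (t + 0) && (best == "" || ($2 + 0) < min) {
            best = $1
            min = $2 + 0
        }
        END { if (best == "") exit 1; print best }
    '
}

# The three queues of lxb0706.cern.ch with a request of 150 minutes.
select_queue 150 <<'EOF'
lxb0706.cern.ch:2119/jobmanager-pbs-short 5
lxb0706.cern.ch:2119/jobmanager-pbs-infinite 2880
lxb0706.cern.ch:2119/jobmanager-pbs-long 180
EOF
```

With these values the pbs-long queue is chosen: pbs-short is too short, and pbs-infinite is long enough but not the shortest candidate.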

After the installation of the software the same command returns:

***************************************************************************************

Cluster OS OSRel. Ded.OS Q selected? Tag status

lxb0706.cern.ch Redhat 7.3 RH7 YES SINGLE-TAG

Queue MaxWCT

lxb0706.cern.ch:2119/jobmanager-pbs-short 5

lxb0706.cern.ch:2119/jobmanager-pbs-infinite 2880

lxb0706.cern.ch:2119/jobmanager-pbs-long (Selected) 180

Tags

VO-dteam-oleole

VO-dteam-stat-accep-test-g4.7.0

VO-dteam-lcg-utils-1.2.2-aborted-install

VO-dteam-lcg-utils-2.1.5

VO-dteam-lcg-utils-3.0

VO-dteam-lcg-utils-3.2

VO-dteam-lcg-utils-2.3.0-aborted-remove

VO-dteam-lcg-utils-2.1.6-processing-remove

VO-dteam-lcg-utils-4.1

VO-dteam-lcg_utils-4.5-to-be-validated (Selected)

****************************************************************************************

Any attempt to run lcg-asis with the --install option will now fail because there is already a tag on the CE (SINGLE-TAG status).
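The same check can be sketched outside the tool: given the list of tags published on a CE, an installation is refused as soon as a tag for the same VO and flag exists, whatever its flavour. The function names `matching_tags` and `can_install` are hypothetical, and treating every suffix after the flag as a flavour is a simplifying assumption.

```shell
#!/bin/bash
# Hypothetical helper: from the published tags on stdin, print those that
# belong to VO-<vo>-<flag>, either plain or with any status flavour appended.
# Simplification: any "-<suffix>" after the flag is treated as a flavour.
matching_tags() {
    local base="VO-$1-$2" tag
    while IFS= read -r tag; do
        case $tag in
            "$base"|"$base"-*) printf '%s\n' "$tag" ;;
        esac
    done
}

# Succeed only when no matching tag is published (the NO-TAG situation).
can_install() {
    [ -z "$(matching_tags "$1" "$2")" ]
}
```

Fed with the tag list shown above, `can_install dteam lcg_utils-4.5` fails because VO-dteam-lcg_utils-4.5-to-be-validated is already published.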
